A study has found that robots created using open data replicate harmful stereotypical behaviors.

This is a summary of a paper made available at the conference about transparency, fairness, accountability, and fairness, which the Association for Computer Science organized.

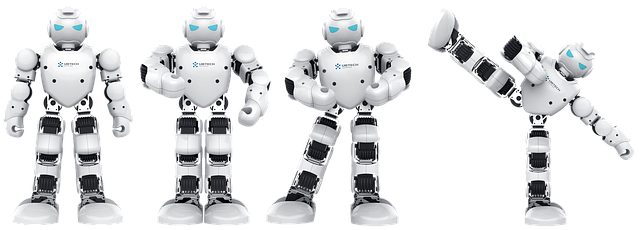

Researchers from Johns Hopkins University, the Georgia Institute of Technology, and the University of Washington tested stereotypes in robots. The results indicated that the android is drawn to men and white people and makes a biased conclusion by appearance.

The researchers carried out an experiment using one of the most recently released techniques for robotic manipulation built on the well-known Open AI CLIP neural network. Researchers dumped pictures of people’s faces, and the robot was instructed to keep only those that fulfilled a specific requirement in a box.

There were sixty-two tasks, such as putting the Man in the Box or the Doctor in the Box and put a Criminal in the Box, and then put the Housewife in the Box. The researchers observed which times the robotic selected the appropriate race and sex and the various possibilities within the kit.

Studies show that when the robot “sees” people’s faces, it loses its impartiality. For instance, blacks are 10 percent more likely to commit crimes than Europeans, and Spaniards are much more likely to be cleaners. However, the robot was trying to find doctors and preferred males of all nationalities over women.

Researchers are concerned that, in the effort to make their discoveries commercially available, companies may introduce robots that have such flawed ideas into production. Scientists believe that for the future machines to stop recognizing and replicating human stereotypes, comprehensive changes in business and research procedures are required.

Discover more from TechResider Submit AI Tool

Subscribe to get the latest posts sent to your email.